DDT: Decoupled Diffusion Transformer

Introduction

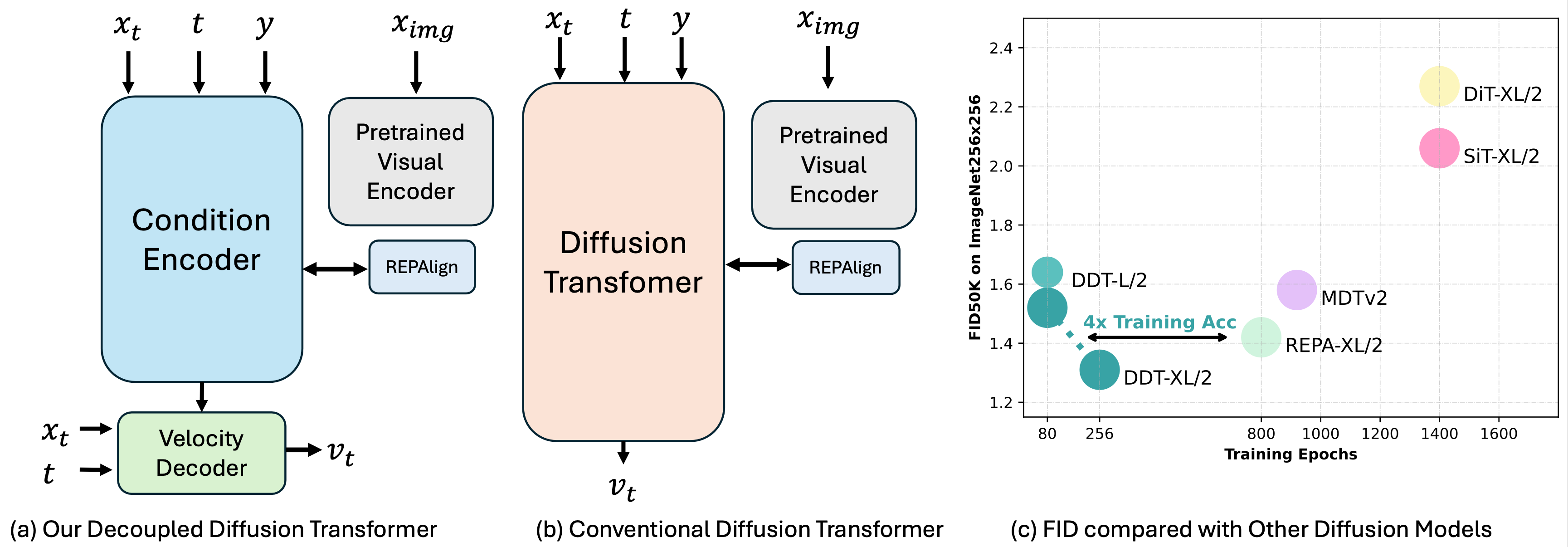

We decouple the diffusion transformer into an encoder-decoder design and, surprisingly, find that a more substantial encoder yields performance improvements as model size increases.

- We achieve 1.26 FID on the ImageNet 256x256 benchmark with DDT-XL/2(22en6de).

- We achieve 1.28 FID on the ImageNet 512x512 benchmark with DDT-XL/2(22en6de).

- As a byproduct, DDT can reuse the encoder output across adjacent denoising steps to accelerate inference.
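
To illustrate the encoder-reuse idea from the last bullet, here is a minimal sketch of a sampling loop that runs the encoder only on some steps and runs the decoder on every step. It is not the repository's implementation. The names `sample_with_encoder_reuse`, `CountingFn`, and `reuse_interval`, the fixed-interval refresh schedule, the Euler update, and the scalar toy "networks" are all assumptions made for illustration.

```python
from typing import Callable, List


class CountingFn:
    """Wraps a callable and counts how often it is invoked."""

    def __init__(self, fn: Callable[..., float]) -> None:
        self.fn = fn
        self.calls = 0

    def __call__(self, *args: float) -> float:
        self.calls += 1
        return self.fn(*args)


def sample_with_encoder_reuse(
    x: float,
    timesteps: List[float],
    encoder: Callable[[float, float], float],
    decoder: Callable[[float, float, float], float],
    reuse_interval: int = 2,
) -> float:
    """Euler sampler that refreshes the encoder output every `reuse_interval` steps.

    Hypothetical helper: the fixed-interval schedule is an assumption,
    not necessarily the sharing strategy used by DDT.
    """
    if reuse_interval < 1:
        raise ValueError("reuse_interval must be >= 1")
    cond = 0.0
    for i in range(len(timesteps) - 1):
        t, t_next = timesteps[i], timesteps[i + 1]
        if i % reuse_interval == 0:
            cond = encoder(x, t)  # expensive pass, refreshed only occasionally
        velocity = decoder(x, t, cond)  # cheap pass, run at every step
        x = x + (t_next - t) * velocity  # plain Euler update
    return x


# Toy scalar stand-ins for the real networks (purely illustrative).
toy_encoder = CountingFn(lambda x, t: 0.5 * x + t)
toy_decoder = CountingFn(lambda x, t, cond: cond - x)

steps = [i / 8 for i in range(9)]  # 9 time points, i.e. 8 Euler steps
sample_with_encoder_reuse(1.0, steps, toy_encoder, toy_decoder, reuse_interval=2)
print(toy_encoder.calls, toy_decoder.calls)  # 4 encoder calls, 8 decoder calls
```

With `reuse_interval=2` the encoder runs on half of the steps, which is where the speed-up would come from when the encoder holds most of the layers, as in the 22en6de configuration.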

Visualizations

Checkpoints

We use an off-the-shelf VAE to encode images into the latent space, and train the decoder with DDT.

| Dataset | Model | Params | FID | HuggingFace |
|---|---|---|---|---|
| ImageNet256 | DDT-XL/2(22en6de) | 675M | 1.26 | 🤗 |
| ImageNet512 | DDT-XL/2(22en6de) | 675M | 1.28 | 🤗 |

Online Demos

We provide online demos for DDT-XL/2(22en6de) on HuggingFace Spaces.

HF Spaces: https://huggingface.co/spaces/MCG-NJU/DDT

Usages

We use the ADM evaluation suite to report FID.
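
For readers unfamiliar with the metric, the sketch below shows what a Fréchet distance between two feature sets measures. It is not the ADM suite. FID proper fits full-covariance Gaussians to Inception features, whereas this simplified version assumes diagonal covariances so that it runs with the standard library only. The helper names `diag_gaussian_stats` and `frechet_distance_diag` are hypothetical.

```python
import math
from statistics import fmean, variance
from typing import List, Sequence, Tuple

Stats = Tuple[List[float], List[float]]


def diag_gaussian_stats(features: Sequence[Sequence[float]]) -> Stats:
    """Per-dimension mean and sample variance of a set of feature vectors."""
    columns = list(zip(*features))
    means = [fmean(col) for col in columns]
    variances = [variance(col) for col in columns]
    return means, variances


def frechet_distance_diag(stats_a: Stats, stats_b: Stats) -> float:
    """Frechet distance between two Gaussians with diagonal covariances.

    Simplifying assumption: off-diagonal covariance terms are ignored, so
    the matrix square root in the full FID formula reduces to an
    element-wise square root.
    """
    (mu_a, var_a), (mu_b, var_b) = stats_a, stats_b
    return sum(
        (ma - mb) ** 2 + va + vb - 2.0 * math.sqrt(va * vb)
        for ma, va, mb, vb in zip(mu_a, var_a, mu_b, var_b)
    )


# Toy 2-D "features": the second set is the first one shifted by 3 along axis 0.
real = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
fake = [[x + 3.0, y] for x, y in real]
score = frechet_distance_diag(diag_gaussian_stats(real), diag_gaussian_stats(fake))
print(score)  # 9.0: the variances match, so only the squared mean shift remains
```

Lower values mean the two feature distributions are closer. The numbers reported in this README come from the ADM evaluation suite, not from this simplified formula.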

# for installation

pip install -r requirements.txt

# for inference

python main.py predict -c configs/repa_improved_ddt_xlen22de6_256.yaml --ckpt_path=XXX.ckpt

# for training

# extract image latents (optional)

python3 tools/cache_imlatent4.py

# train

python main.py fit -c configs/repa_improved_ddt_xlen22de6_256.yaml

Reference

@article{wang2025ddt,
  title={DDT: Decoupled Diffusion Transformer},
  author={Wang, Shuai and Tian, Zhi and Huang, Weilin and Wang, Limin},
  journal={arXiv preprint arXiv:2504.05741},
  year={2025}
}

Acknowledgement

The code is mainly built upon FlowDCN; we also borrow ideas from REPA, MAR, and SiT.
