
DDT: Decoupled Diffusion Transformer

arxiv link: https://arxiv.org/abs/2504.05741

ImageNet256 leaderboard: https://paperswithcode.com/sota/image-generation-on-imagenet-256x256

ImageNet512 leaderboard: https://paperswithcode.com/sota/image-generation-on-imagenet-512x512

Introduction

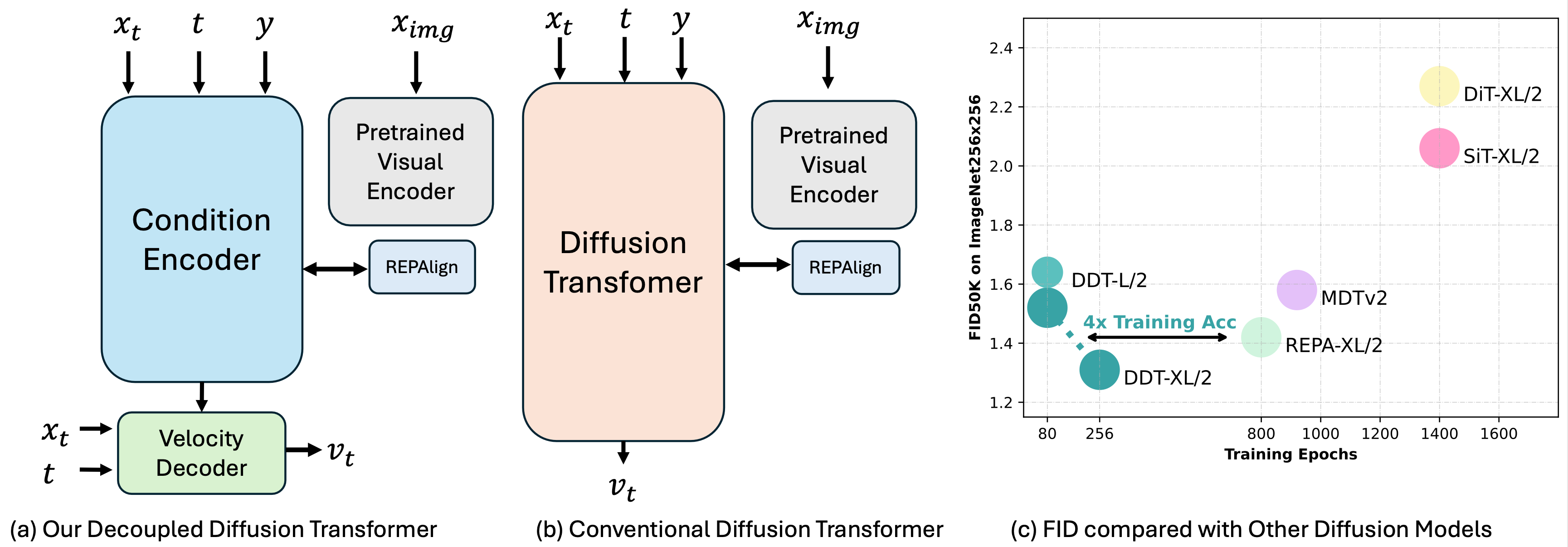

We decouple the diffusion transformer into an encoder-decoder design and, surprisingly, find that a more substantial encoder yields larger performance improvements as model size increases.
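To make the encoder-decoder split concrete, here is a toy numpy sketch, not the repository's implementation. Assumptions: the names `Block`, `DecoupledToy`, `encode` and `decode` are hypothetical; each block is a residual MLP with AdaLN-style (scale, shift) modulation, with attention omitted for brevity; the decoder is modulated only by the encoder output `z`, which is a simplification. The 22/6 split mirrors the DDT-XL/2 (22en6de) naming, but the width is tiny and the weights are random.

```python
import numpy as np

rng = np.random.default_rng(0)


def layer_norm(x, eps=1e-6):
    # Normalize each token's features; the affine part comes from modulation.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


class Block:
    """Toy residual MLP block with AdaLN-style (scale, shift) modulation."""

    def __init__(self, dim):
        self.w1 = rng.normal(0.0, 0.02, (dim, 4 * dim))
        self.w2 = rng.normal(0.0, 0.02, (4 * dim, dim))
        self.mod = rng.normal(0.0, 0.02, (dim, 2 * dim))  # cond -> (scale, shift)

    def __call__(self, x, cond):
        scale, shift = np.split(cond @ self.mod, 2, axis=-1)
        h = layer_norm(x) * (1.0 + scale) + shift
        return x + np.maximum(h @ self.w1, 0.0) @ self.w2


class DecoupledToy:
    """Hypothetical encoder-decoder split of a diffusion transformer."""

    def __init__(self, dim, n_enc, n_dec):
        self.encoder = [Block(dim) for _ in range(n_enc)]
        self.decoder = [Block(dim) for _ in range(n_dec)]
        self.out = rng.normal(0.0, 0.02, (dim, dim))

    def encode(self, x_t, c):
        # Condition encoder: maps noisy tokens x_t (batch, tokens, dim) plus a
        # per-sample timestep/class embedding c (batch, dim) to a condition z.
        cond = np.broadcast_to(c[:, None, :], x_t.shape)
        z = x_t
        for blk in self.encoder:
            z = blk(z, cond)
        return z

    def decode(self, x_t, z):
        # Velocity decoder: refines x_t under per-token modulation from z.
        h = x_t
        for blk in self.decoder:
            h = blk(h, z)
        return h @ self.out

    def __call__(self, x_t, c):
        return self.decode(x_t, self.encode(x_t, c))


model = DecoupledToy(dim=32, n_enc=22, n_dec=6)
x_t = rng.normal(size=(2, 16, 32))  # (batch, tokens, dim)
c = rng.normal(size=(2, 32))        # stand-in for timestep + class embedding
v = model(x_t, c)
print(v.shape)  # (2, 16, 32)
```

Exposing `encode` and `decode` as separate calls is what allows `z` to be cached and handed to `decode` at a later sampling step.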

- We achieve 1.26 FID on the ImageNet 256x256 benchmark with DDT-XL/2 (22en6de, i.e., 22 encoder and 6 decoder blocks).

- We achieve 1.28 FID on the ImageNet 512x512 benchmark with DDT-XL/2 (22en6de).

- As a byproduct, DDT can reuse the encoder output across adjacent denoising steps to accelerate inference.
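Encoder reuse can be sketched as a sampler that recomputes the encoder only every few steps while the decoder runs at every step. This is a minimal sketch assuming a plain Euler sampler and a fixed, uniform sharing interval; `sample_with_encoder_sharing`, `interval`, `toy_encode`, `toy_decode` and `TARGET` are hypothetical names, and the schedule used in the paper may differ.

```python
def sample_with_encoder_sharing(encode, decode, x, timesteps, interval):
    """Euler sampler that refreshes the encoder output every `interval` steps.

    encode(x, t) -> z and decode(x, t, z) -> velocity stand in for the
    condition encoder and velocity decoder; interval=1 means no sharing.
    """
    z = None
    encoder_calls = 0
    for i in range(len(timesteps) - 1):
        t, t_next = timesteps[i], timesteps[i + 1]
        if i % interval == 0:
            z = encode(x, t)          # refresh the cached condition
            encoder_calls += 1
        v = decode(x, t, z)           # the decoder always sees the current x
        x = x + (t_next - t) * v      # Euler step along the ODE
    return x, encoder_calls


TARGET = 3.0  # hypothetical clean sample for the toy


def toy_encode(x, t):
    # Idealized encoder: a perfect clean-sample estimate that is constant
    # across steps, so reusing it loses nothing in this toy.
    return TARGET


def toy_decode(x, t, z):
    # Velocity that carries x in a straight line to the estimate z by t = 1.
    return (z - x) / (1.0 - t)


steps = [i / 10 for i in range(11)]  # 10 Euler steps from t=0 to t=1
x_full, calls_full = sample_with_encoder_sharing(toy_encode, toy_decode, 0.0, steps, interval=1)
x_shared, calls_shared = sample_with_encoder_sharing(toy_encode, toy_decode, 0.0, steps, interval=2)
print(calls_full, calls_shared)  # 10 5
```

In this toy the encoder output never changes, so sharing is lossless. In a real model `z` drifts between steps, so a larger interval trades some accuracy for fewer encoder passes.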

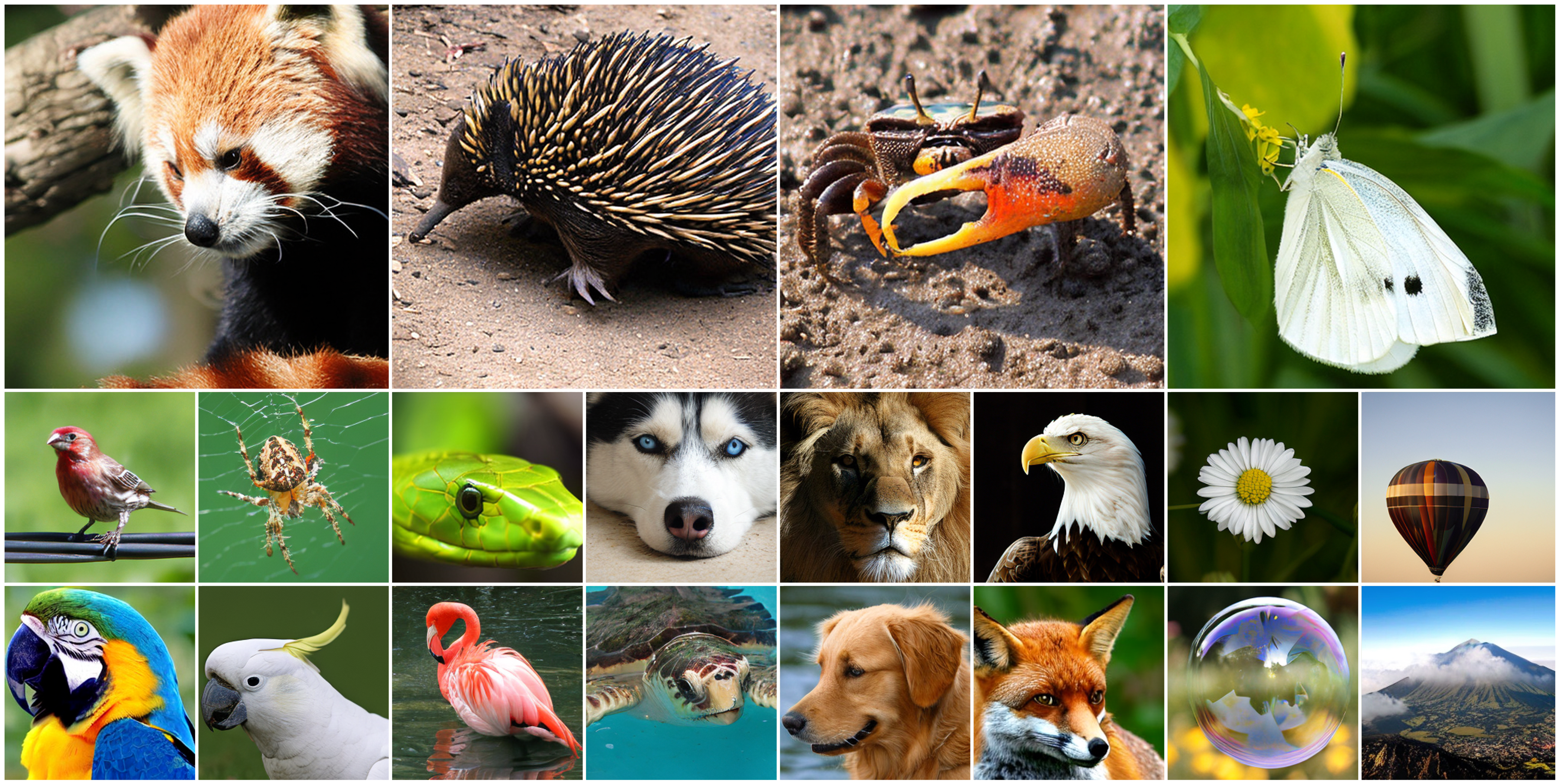

Visualizations

Checkpoints

Waiting for release.

Usage

We use the ADM evaluation suite to report FID.

```shell
# for installation
pip install -r requirements.txt

# for training
python main.py fit -c configs/repa_improved_ddt_xlen22de6_256.yaml

# for inference
python main.py predict -c configs/repa_improved_ddt_xlen22de6_256.yaml --ckpt_path=XXX.ckpt
```
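FID, as reported with the ADM evaluation suite, is the Fréchet distance between Gaussian fits of Inception features for real and generated images: FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). The numpy sketch below implements only that final distance. It is not the ADM suite's code, it leaves out Inception feature extraction, and the function names are hypothetical.

```python
import numpy as np


def _sqrtm_psd(a):
    # Square root of a symmetric positive semi-definite matrix via eigh.
    w, v = np.linalg.eigh(a)
    w = np.clip(w, 0.0, None)  # guard against tiny negative round-off
    return (v * np.sqrt(w)) @ v.T


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between N(mu1, sigma1) and N(mu2, sigma2).

    Uses Tr((S1 S2)^(1/2)) = Tr((S1^(1/2) S2 S1^(1/2))^(1/2)), which keeps
    every matrix square root symmetric so eigh is sufficient.
    """
    diff = mu1 - mu2
    s1_half = _sqrtm_psd(sigma1)
    covmean_trace = np.trace(_sqrtm_psd(s1_half @ sigma2 @ s1_half))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * covmean_trace)


def fid_from_features(feats_real, feats_fake):
    # feats_*: (num_images, feature_dim) arrays, e.g. Inception pool features.
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_f = np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu_r, cov_r, mu_f, cov_f)


# Equal covariances, means one unit apart in each of 3 dimensions.
print(frechet_distance(np.zeros(3), np.eye(3), np.ones(3), np.eye(3)))  # approximately 3.0
```

Benchmark FID additionally depends on the exact feature extractor and reference statistics, which is why the numbers above come from the ADM suite rather than a reimplementation.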

Reference

```bibtex
@ARTICLE{ddt,
  title         = "DDT: Decoupled Diffusion Transformer",
  author        = "Wang, Shuai and Tian, Zhi and Huang, Weilin and Wang, Limin",
  month         = apr,
  year          = 2025,
  archivePrefix = "arXiv",
  primaryClass  = "cs.CV",
  eprint        = "2504.05741"
}
```
