{

"cells": [

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# TimesNet Tutorial\n",

"**Set-up instructions:** this notebook give a tutorial on the learning task supported by `TimesNet`.\n",

"\n",

"`TimesNet` can support basically 5 tasks, which are respectively long-term forecast, short-term forecast, imputation, anomaly detection, classification."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 1. Install Python 3.8. For convenience, execute the following command."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"vscode": {

"languageId": "shellscript"

}

},

"outputs": [],

"source": [

"pip install -r requirements.txt"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 2. Package Import"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import torch \n",

"import torch.nn as nn\n",

"import torch.nn.functional as F\n",

"import torch.fft\n",

"from layers.Embed import DataEmbedding\n",

"from layers.Conv_Blocks import Inception_Block_V1 \n",

" #convolution block used for convoluting the 2D time data, changeable"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 3. TimesBlock Construction\n",

" The core idea of `TimesNet` lies in the construction of `TimesBlock`, which generally gets the base frequencies by implementing FFT on the data, and then reshapes the times series to 2D variation respectively from the main base frequencies, followed by a 2D convolution whose outputs are reshaped back and added with weight to form the final output.\n",

"\n",

" In the following section, we will have a detailed view on `TimesBlock`.\n",

"\n",

" TimesBlock has 2 members. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"class TimesBlock(nn.Module):\n",

" def __init__(self, configs):\n",

" ...\n",

" \n",

" def forward(self, x):\n",

" ..."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"First, let's focus on ```__init__(self, configs):```"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def __init__(self, configs): ##configs is the configuration defined for TimesBlock\n",

" super(TimesBlock, self).__init__() \n",

" self.seq_len = configs.seq_len ##sequence length \n",

" self.pred_len = configs.pred_len ##prediction length\n",

" self.k = configs.top_k ##k denotes how many top frequencies are \n",

" #taken into consideration\n",

" # parameter-efficient design\n",

" self.conv = nn.Sequential(\n",

" Inception_Block_V1(configs.d_model, configs.d_ff,\n",

" num_kernels=configs.num_kernels),\n",

" nn.GELU(),\n",

" Inception_Block_V1(configs.d_ff, configs.d_model,\n",

" num_kernels=configs.num_kernels)\n",

" )"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Then, have a look at ```forward(self, x)```"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def forward(self, x):\n",

" B, T, N = x.size()\n",

" #B: batch size T: length of time series N:number of features\n",

" period_list, period_weight = FFT_for_Period(x, self.k)\n",

" #FFT_for_Period() will be shown later. Here, period_list([top_k]) denotes \n",

" #the top_k-significant period and period_weight([B, top_k]) denotes its weight(amplitude)\n",

"\n",

" res = []\n",

" for i in range(self.k):\n",

" period = period_list[i]\n",

"\n",

" # padding : to form a 2D map, we need total length of the sequence, plus the part \n",

" # to be predicted, to be divisible by the period, so padding is needed\n",

" if (self.seq_len + self.pred_len) % period != 0:\n",

" length = (\n",

" ((self.seq_len + self.pred_len) // period) + 1) * period\n",

" padding = torch.zeros([x.shape[0], (length - (self.seq_len + self.pred_len)), x.shape[2]]).to(x.device)\n",

" out = torch.cat([x, padding], dim=1)\n",

" else:\n",

" length = (self.seq_len + self.pred_len)\n",

" out = x\n",

"\n",

" # reshape: we need each channel of a single piece of data to be a 2D variable,\n",

" # Also, in order to implement the 2D conv later on, we need to adjust the 2 dimensions \n",

" # to be convolutioned to the last 2 dimensions, by calling the permute() func.\n",

" # Whereafter, to make the tensor contiguous in memory, call contiguous()\n",

" out = out.reshape(B, length // period, period,\n",

" N).permute(0, 3, 1, 2).contiguous()\n",

" \n",

" #2D convolution to grap the intra- and inter- period information\n",

" out = self.conv(out)\n",

"\n",

" # reshape back, similar to reshape\n",

" out = out.permute(0, 2, 3, 1).reshape(B, -1, N)\n",

" \n",

" #truncating down the padded part of the output and put it to result\n",

" res.append(out[:, :(self.seq_len + self.pred_len), :])\n",

" res = torch.stack(res, dim=-1) #res: 4D [B, length , N, top_k]\n",

"\n",

" # adaptive aggregation\n",

" #First, use softmax to get the normalized weight from amplitudes --> 2D [B,top_k]\n",

" period_weight = F.softmax(period_weight, dim=1) \n",

"\n",

" #after two unsqueeze(1),shape -> [B,1,1,top_k],so repeat the weight to fit the shape of res\n",

" period_weight = period_weight.unsqueeze(\n",

" 1).unsqueeze(1).repeat(1, T, N, 1)\n",

" \n",

" #add by weight the top_k periods' result, getting the result of this TimesBlock\n",

" res = torch.sum(res * period_weight, -1)\n",

"\n",

" # residual connection\n",

" res = res + x\n",

" return res"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The ```FFT_for_Period``` above is given by:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def FFT_for_Period(x, k=2):\n",

" # xf shape [B, T, C], denoting the amplitude of frequency(T) given the datapiece at B,N\n",

" xf = torch.fft.rfft(x, dim=1) \n",

"\n",

" # find period by amplitudes: here we assume that the periodic features are basically constant\n",

" # in different batch and channel, so we mean out these two dimensions, getting a list frequency_list with shape[T] \n",

" # each element at pos t of frequency_list denotes the overall amplitude at frequency (t)\n",

" frequency_list = abs(xf).mean(0).mean(-1) \n",

" frequency_list[0] = 0\n",

"\n",

" #by torch.topk(),we can get the biggest k elements of frequency_list, and its positions(i.e. the k-main frequencies in top_list)\n",

" _, top_list = torch.topk(frequency_list, k)\n",

"\n",

" #Returns a new Tensor 'top_list', detached from the current graph.\n",

" #The result will never require gradient.Convert to a numpy instance\n",

" top_list = top_list.detach().cpu().numpy()\n",

" \n",

" #period:a list of shape [top_k], recording the periods of mean frequencies respectively\n",

" period = x.shape[1] // top_list\n",

"\n",

" #Here,the 2nd item returned has a shape of [B, top_k],representing the biggest top_k amplitudes \n",

" # for each piece of data, with N features being averaged.\n",

" return period, abs(xf).mean(-1)[:, top_list] "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

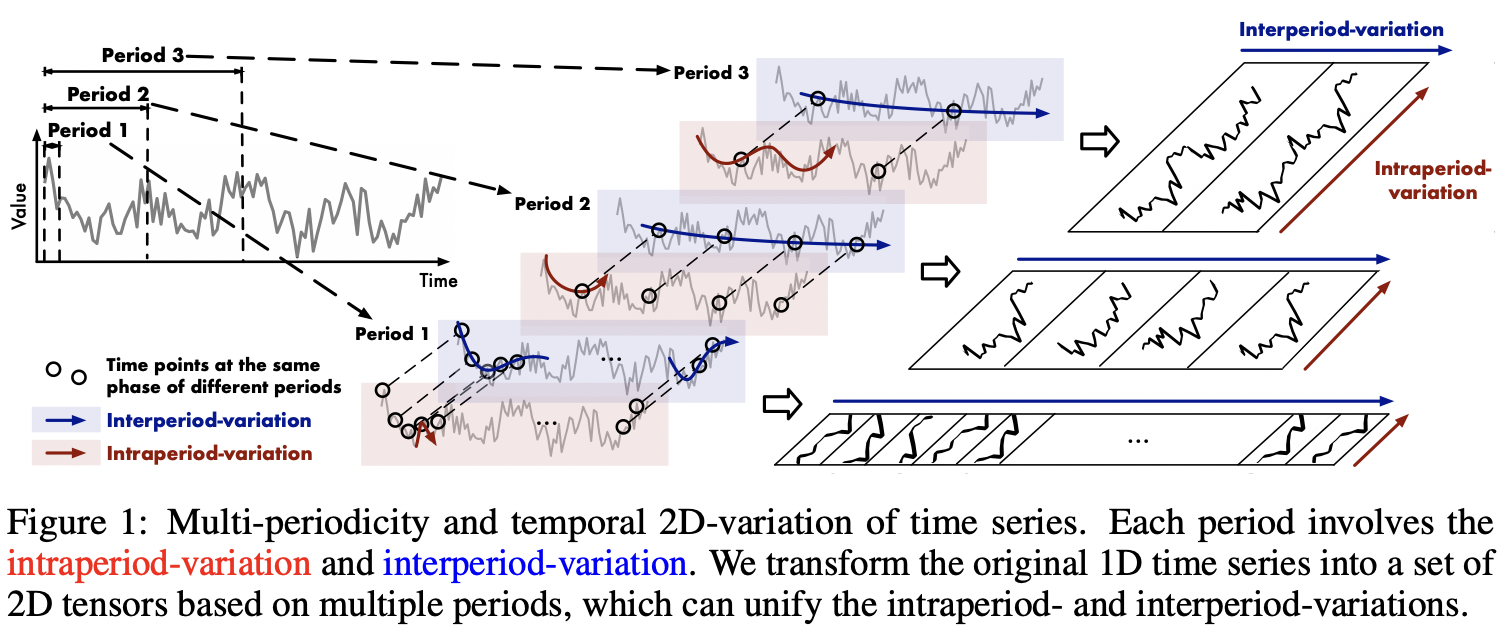

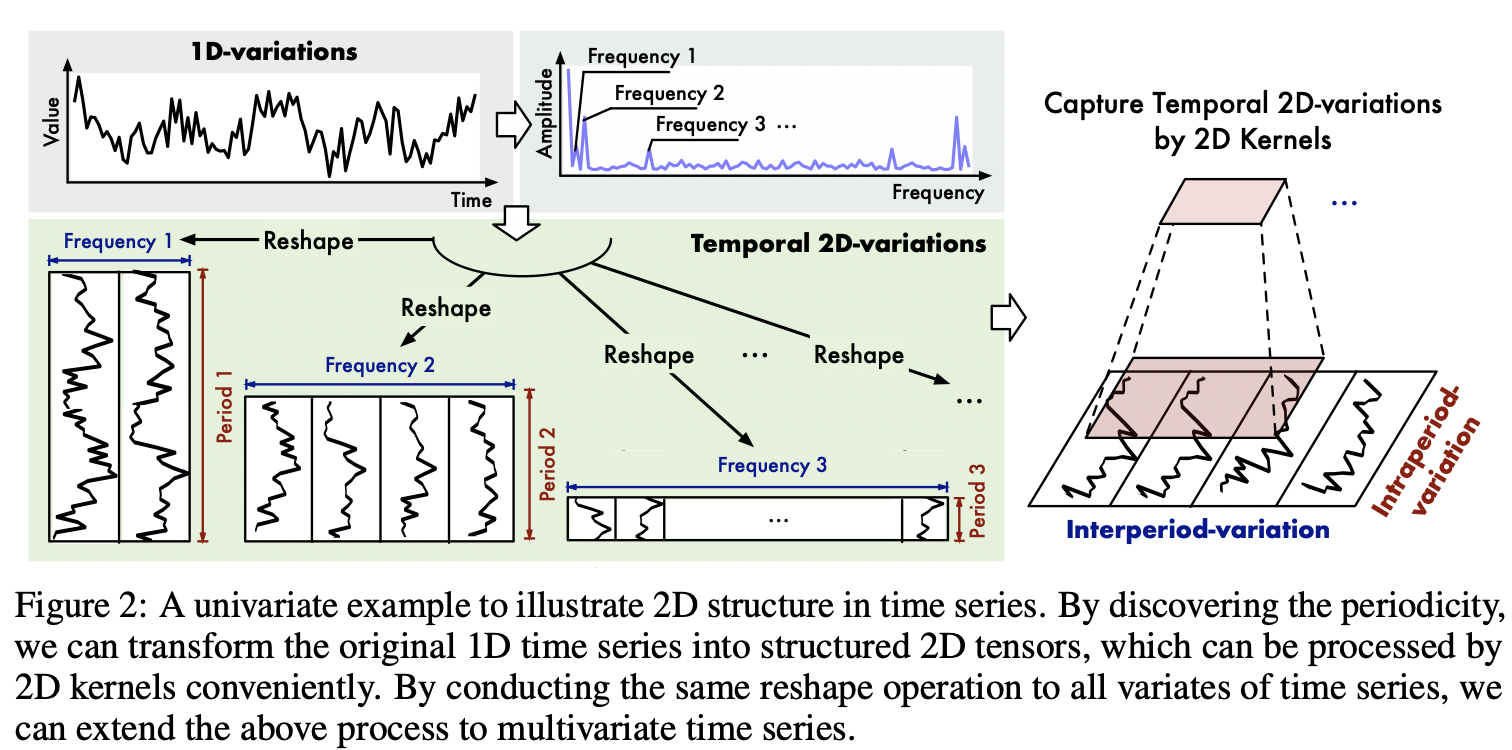

"To make it clearer, please see the figures below.\n",

"\n",

"\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"For more details, please read the our paper \n",

"(link: https://openreview.net/pdf?id=ju_Uqw384Oq)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 4. TimesNet\n",

"\n",

"So far we've got `TimesBlock`, which is excel at retrieving intra- and inter- period temporal information. We become capable of building a `TimesNet`. `TimesNet` is proficient in multitasks including short- and long-term forecasting, imputation, classification, and anomaly detection.\n",

"\n",

"In this section, we'll have a detailed overview on how `TimesNet` gains its power in these tasks."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"class Model(nn.Module):\n",

" def __init__(self, configs):\n",

" ...\n",

" \n",

" def forecast(self, x_enc, x_mark_enc, x_dec, x_mark_dec):\n",

" ...\n",

"\n",

" def imputation(self, x_enc, x_mark_enc, x_dec, x_mark_dec, mask):\n",

" ...\n",

"\n",

" def anomaly_detection(self, x_enc):\n",

" ...\n",

" \n",

" def classification(self, x_enc, x_mark_enc):\n",

" ...\n",

"\n",

" def forward(self, x_enc, x_mark_enc, x_dec, x_mark_dec, mask=None):\n",

" ..."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"First of all, let's focus on ```__init__(self, configs):```"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def __init__(self, configs):\n",

" super(Model, self).__init__()\n",

" #params init\n",

" self.configs = configs\n",

" self.task_name = configs.task_name\n",

" self.seq_len = configs.seq_len\n",

" self.label_len = configs.label_len\n",

" self.pred_len = configs.pred_len\n",

"\n",

" #stack TimesBlock for e_layers times to form the main part of TimesNet, named model\n",

" self.model = nn.ModuleList([TimesBlock(configs)\n",

" for _ in range(configs.e_layers)])\n",

" \n",

" #embedding & normalization\n",

" # enc_in is the encoder input size, the number of features for a piece of data\n",

" # d_model is the dimension of embedding\n",

" self.enc_embedding = DataEmbedding(configs.enc_in, configs.d_model, configs.embed, configs.freq,\n",

" configs.dropout)\n",

" self.layer = configs.e_layers # num of encoder layers\n",

" self.layer_norm = nn.LayerNorm(configs.d_model)\n",

"\n",

" #define the some layers for different tasks\n",

" if self.task_name == 'long_term_forecast' or self.task_name == 'short_term_forecast':\n",

" self.predict_linear = nn.Linear(\n",

" self.seq_len, self.pred_len + self.seq_len)\n",

" self.projection = nn.Linear(\n",

" configs.d_model, configs.c_out, bias=True)\n",

" if self.task_name == 'imputation' or self.task_name == 'anomaly_detection':\n",

" self.projection = nn.Linear(\n",

" configs.d_model, configs.c_out, bias=True)\n",

" if self.task_name == 'classification':\n",

" self.act = F.gelu\n",

" self.dropout = nn.Dropout(configs.dropout)\n",

" self.projection = nn.Linear(\n",

" configs.d_model * configs.seq_len, configs.num_class)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 4.1 Forecast\n",

"\n",

"The basic idea of forecasting is to lengthen the known sequence to (seq_len+pred_len), which is the total length after forecasting. Then by several TimesBlock layers together with layer normalization, some underlying intra- and inter- period information is represented. With these information, we can project it to the output space. Whereafter by denorm ( if Non-stationary Transformer) we get the final output."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def forecast(self, x_enc, x_mark_enc, x_dec, x_mark_dec):\n",

" # Normalization from Non-stationary Transformer at temporal dimension\n",

" means = x_enc.mean(1, keepdim=True).detach() #[B,T]\n",

" x_enc = x_enc - means\n",

" stdev = torch.sqrt(\n",

" torch.var(x_enc, dim=1, keepdim=True, unbiased=False) + 1e-5)\n",

" x_enc /= stdev\n",

"\n",

" # embedding: projecting a number to a C-channel vector\n",

" enc_out = self.enc_embedding(x_enc, x_mark_enc) # [B,T,C] C is d_model\n",

" enc_out = self.predict_linear(enc_out.permute(0, 2, 1)).permute(\n",

" 0, 2, 1) # align temporal dimension [B,pred_len+seq_len,C]\n",

" \n",

" # TimesNet: pass through TimesBlock for self.layer times each with layer normalization\n",

" for i in range(self.layer):\n",

" enc_out = self.layer_norm(self.model[i](enc_out))\n",

"\n",

" # project back #[B,T,d_model]-->[B,T,c_out]\n",

" dec_out = self.projection(enc_out) \n",

"\n",

" # De-Normalization from Non-stationary Transformer\n",

" dec_out = dec_out * \\\n",

" (stdev[:, 0, :].unsqueeze(1).repeat(\n",

" 1, self.pred_len + self.seq_len, 1)) #lengthen the stdev to fit the dec_out\n",

" dec_out = dec_out + \\\n",

" (means[:, 0, :].unsqueeze(1).repeat(\n",

" 1, self.pred_len + self.seq_len, 1)) #lengthen the mean to fit the dec_out\n",

" return dec_out"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 4.2 Imputation\n",

"\n",

"Imputation is a task aiming at completing some missing value in the time series, so in some degree it's similar to forecast. We can still use the similar step to cope with it."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def imputation(self, x_enc, x_mark_enc, x_dec, x_mark_dec, mask):\n",

" # Normalization from Non-stationary Transformer\n",

" means = torch.sum(x_enc, dim=1) / torch.sum(mask == 1, dim=1)\n",

" means = means.unsqueeze(1).detach()\n",

" x_enc = x_enc - means\n",

" x_enc = x_enc.masked_fill(mask == 0, 0)\n",

" stdev = torch.sqrt(torch.sum(x_enc * x_enc, dim=1) /\n",

" torch.sum(mask == 1, dim=1) + 1e-5)\n",

" stdev = stdev.unsqueeze(1).detach()\n",

" x_enc /= stdev\n",

"\n",

" # embedding\n",

" enc_out = self.enc_embedding(x_enc, x_mark_enc) # [B,T,C]\n",

" # TimesNet\n",

" for i in range(self.layer):\n",

" enc_out = self.layer_norm(self.model[i](enc_out))\n",

" # project back\n",

" dec_out = self.projection(enc_out)\n",

"\n",

" # De-Normalization from Non-stationary Transformer\n",

" dec_out = dec_out * \\\n",

" (stdev[:, 0, :].unsqueeze(1).repeat(\n",

" 1, self.pred_len + self.seq_len, 1))\n",

" dec_out = dec_out + \\\n",

" (means[:, 0, :].unsqueeze(1).repeat(\n",

" 1, self.pred_len + self.seq_len, 1))\n",

" return dec_out"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 4.3 Anomaly Detection\n",

"\n",

"Similar to Imputation."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def anomaly_detection(self, x_enc):\n",

" # Normalization from Non-stationary Transformer\n",

" means = x_enc.mean(1, keepdim=True).detach()\n",

" x_enc = x_enc - means\n",

" stdev = torch.sqrt(\n",

" torch.var(x_enc, dim=1, keepdim=True, unbiased=False) + 1e-5)\n",

" x_enc /= stdev\n",

" # embedding\n",

" enc_out = self.enc_embedding(x_enc, None) # [B,T,C]\n",

" # TimesNet\n",

" for i in range(self.layer):\n",

" enc_out = self.layer_norm(self.model[i](enc_out))\n",

" # project back\n",

" dec_out = self.projection(enc_out)\n",

" # De-Normalization from Non-stationary Transformer\n",

" dec_out = dec_out * \\\n",

" (stdev[:, 0, :].unsqueeze(1).repeat(\n",

" 1, self.pred_len + self.seq_len, 1))\n",

" dec_out = dec_out + \\\n",

" (means[:, 0, :].unsqueeze(1).repeat(\n",

" 1, self.pred_len + self.seq_len, 1))\n",

" return dec_out"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 4.4 Classification"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def classification(self, x_enc, x_mark_enc):\n",

" # embedding\n",

" enc_out = self.enc_embedding(x_enc, None) # [B,T,C]\n",

" # TimesNet\n",

" for i in range(self.layer):\n",

" enc_out = self.layer_norm(self.model[i](enc_out))\n",

"\n",

" # Output\n",

" # the output transformer encoder/decoder embeddings don't include non-linearity\n",

" output = self.act(enc_out)\n",

" output = self.dropout(output)\n",

"\n",

" # zero-out padding embeddings:The primary role of x_mark_enc in the code is to \n",

" # zero out the embeddings for padding positions in the output tensor through \n",

" # element-wise multiplication, helping the model to focus on meaningful data \n",

" # while disregarding padding.\n",

" output = output * x_mark_enc.unsqueeze(-1)\n",

" \n",

" # (batch_size, seq_length * d_model)\n",

" output = output.reshape(output.shape[0], -1)\n",

" output = self.projection(output) # (batch_size, num_classes)\n",

" return output"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"In the end, with so many tasks above, we become able to complete `forward(self, x_enc, x_mark_enc, x_dec, x_mark_dec, mask=None):`. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def forward(self, x_enc, x_mark_enc, x_dec, x_mark_dec, mask=None):\n",

" if self.task_name == 'long_term_forecast' or self.task_name == 'short_term_forecast':\n",

" dec_out = self.forecast(x_enc, x_mark_enc, x_dec, x_mark_dec)\n",

" return dec_out[:, -self.pred_len:, :] # [B, L, D] return the predicted part of sequence\n",

" if self.task_name == 'imputation':\n",

" dec_out = self.imputation(\n",

" x_enc, x_mark_enc, x_dec, x_mark_dec, mask)\n",

" return dec_out # [B, L, D] return the whole sequence with missing value estimated\n",

" if self.task_name == 'anomaly_detection':\n",

" dec_out = self.anomaly_detection(x_enc)\n",

" return dec_out # [B, L, D] return the sequence that should be correct\n",

" if self.task_name == 'classification':\n",

" dec_out = self.classification(x_enc, x_mark_enc)\n",

" return dec_out # [B, N] return the classification result\n",

" return None"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 5. Training and Settings\n",

"\n",

"By now we've successfully build up `TimesNet`. We are now facing the problem how to train and test this neural network. The action of training, validating as well as testing is implemented at __*exp*__ part, in which codes for different tasks are gathered. These experiments are not only for `TimesNet` training, but also feasible for any other time series representation model. But here, we simply use `TimesNet` to analyse.\n",

"\n",

"`TimesNet` is a state-of-art in multiple tasks, while here we would only introduce its training for long-term forecast task, since the backbone of the training process for other tasks is similar to this one. Again, test and validation code can be easily understood once you've aware how the training process works. So first of all, we are going to focus on the training of `TimesNet` on task long-term forecasting.\n",

"\n",

"We will discuss many aspects, including the training process, training loss etc."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 5.1 Training for Long-term Forecast Task\n",

"\n",

"The following codes represents the process of training model for long-term forecasting task. We'll have a detailed look at it. To make it brief, the training part can be briefly divided into several parts, including Data Preparation, Creating Save Path, Initialization, Optimizer and Loss Function Selection, Using Mixed Precision Training, Training Loop, Validation and Early Stopping, Learning Rate Adjustment, Loading the Best Model.\n",

"\n",

"For more details, please see the code below. 'train' process is defined in the experiment __class Exp_Long_Term_Forecast__."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import os"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def train(self, setting): #setting is the args for this model training\n",

" #get train dataloader\n",

" train_data, train_loader = self._get_data(flag='train')\n",

" vali_data, vali_loader = self._get_data(flag='val')\n",

" test_data, test_loader = self._get_data(flag='test')\n",

"\n",

" # set path of checkpoint for saving and loading model\n",

" path = os.path.join(self.args.checkpoints, setting)\n",

" if not os.path.exists(path):\n",

" os.makedirs(path)\n",

" time_now = time.time()\n",

"\n",

" train_steps = len(train_loader)\n",

"\n",

" # EarlyStopping is typically a custom class or function that monitors the performance \n",

" # of a model during training, usually by tracking a certain metric (commonly validation \n",

" # loss or accuracy).It's a common technique used in deep learning to prevent overfitting \n",

" # during the training\n",

" early_stopping = EarlyStopping(patience=self.args.patience, verbose=True)\n",

"\n",

" #Optimizer and Loss Function Selection\n",

" model_optim = self._select_optimizer()\n",

" criterion = self._select_criterion()\n",

"\n",

" # AMP training is a technique that uses lower-precision data types (e.g., float16) \n",

" # for certain computations to accelerate training and reduce memory usage.\n",

" if self.args.use_amp: \n",

" scaler = torch.cuda.amp.GradScaler()\n",

" for epoch in range(self.args.train_epochs):\n",

" iter_count = 0\n",

" train_loss = []\n",

" self.model.train()\n",

" epoch_time = time.time()\n",

"\n",

" #begin training in this epoch\n",

" for i, (batch_x, batch_y, batch_x_mark, batch_y_mark) in enumerate(train_loader):\n",

" iter_count += 1\n",

" model_optim.zero_grad()\n",

" batch_x = batch_x.float().to(self.device) #input features\n",

" batch_y = batch_y.float().to(self.device) #target features\n",

"\n",

" # _mark holds information about time-related features. Specifically, it is a \n",

" # tensor that encodes temporal information and is associated with the \n",

" # input data batch_x.\n",

" batch_x_mark = batch_x_mark.float().to(self.device)\n",

" batch_y_mark = batch_y_mark.float().to(self.device)\n",

" # decoder input(didn't use in TimesNet case)\n",

" dec_inp = torch.zeros_like(batch_y[:, -self.args.pred_len:, :]).float()\n",

" dec_inp = torch.cat([batch_y[:, :self.args.label_len, :], dec_inp], dim=1).float().to(self.device)\n",

" # encoder - decoder\n",

" if self.args.use_amp: #in the case of TimesNet, use_amp should be False\n",

" with torch.cuda.amp.autocast():\n",

" # whether to output attention in ecoder,in TimesNet case is no\n",

" if self.args.output_attention: \n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]\n",

" # model the input\n",

" else:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)\n",

"\n",

" # forecasting task, options:[M, S, MS]; M:multivariate predict multivariate, \n",

" # S:univariate predict univariate, MS:multivariate predict univariate'\n",

" #if multivariate predict univariate',then output should be the last column of the decoder\n",

" # output, so f_dim = -1 to only contain the last column, else is all columns\n",

" f_dim = -1 if self.args.features == 'MS' else 0 \n",

" outputs = outputs[:, -self.args.pred_len:, f_dim:]\n",

" batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)\n",

"\n",

" # calc loss\n",

" loss = criterion(outputs, batch_y)\n",

" train_loss.append(loss.item())\n",

" else: #similar to when use_amp is True\n",

" if self.args.output_attention:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]\n",

" else:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)\n",

" f_dim = -1 if self.args.features == 'MS' else 0\n",

" outputs = outputs[:, -self.args.pred_len:, f_dim:]\n",

" batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)\n",

" loss = criterion(outputs, batch_y)\n",

" train_loss.append(loss.item())\n",

"\n",

" # When train rounds attain some 100-multiple, print speed, left time, loss. etc feedback\n",

" if (i + 1) % 100 == 0:\n",

" print(\"\\titers: {0}, epoch: {1} | loss: {2:.7f}\".format(i + 1, epoch + 1, loss.item()))\n",

" speed = (time.time() - time_now) / iter_count\n",

" left_time = speed * ((self.args.train_epochs - epoch) * train_steps - i)\n",

" print('\\tspeed: {:.4f}s/iter; left time: {:.4f}s'.format(speed, left_time))\n",

" iter_count = 0\n",

" time_now = time.time()\n",

"\n",

" #BP\n",

" if self.args.use_amp:\n",

" scaler.scale(loss).backward()\n",

" scaler.step(model_optim)\n",

" scaler.update()\n",

" else:\n",

" loss.backward()\n",

" model_optim.step()\n",

" \n",

" #This epoch comes to end, print information\n",

" print(\"Epoch: {} cost time: {}\".format(epoch + 1, time.time() - epoch_time))\n",

" train_loss = np.average(train_loss)\n",

"\n",

" #run test and validation on current model\n",

" vali_loss = self.vali(vali_data, vali_loader, criterion)\n",

" test_loss = self.vali(test_data, test_loader, criterion)\n",

"\n",

" #print train, test, vali loss information\n",

" print(\"Epoch: {0}, Steps: {1} | Train Loss: {2:.7f} Vali Loss: {3:.7f} Test Loss: {4:.7f}\".format(\n",

" epoch + 1, train_steps, train_loss, vali_loss, test_loss))\n",

" \n",

" #Decide whether to trigger Early Stopping. if early_stop is true, it means that \n",

" #this epoch's training is now at a flat slope, so stop further training for this epoch.\n",

" early_stopping(vali_loss, self.model, path)\n",

" if early_stopping.early_stop:\n",

" print(\"Early stopping\")\n",

" break\n",

"\n",

" #adjust learning keys\n",

" adjust_learning_rate(model_optim, epoch + 1, self.args)\n",

" best_model_path = path + '/' + 'checkpoint.pth'\n",

"\n",

" # loading the trained model's state dictionary from a saved checkpoint file \n",

" # located at best_model_path.\n",

" self.model.load_state_dict(torch.load(best_model_path))\n",

" return self.model"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"If you want to learn more, please see it at exp/exp_long_term_forecasting.py"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 5.2 Early Stopping Mechanism\n",

"\n",

"__EarlyStopping__ is typically a custom class or function that monitors the performance of a model during training, usually by tracking a certain metric (commonly validation loss or accuracy).It's a common technique used in deep learning to prevent overfitting during the training.\n",

"\n",

"Let's see the code below(original code is in `tools.py`)."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"class EarlyStopping:\n",

" def __init__(self, patience=7, verbose=False, delta=0):\n",

" self.patience = patience # how many times will you tolerate for loss not being on decrease\n",

" self.verbose = verbose # whether to print tip info\n",

" self.counter = 0 # now how many times loss not on decrease\n",

" self.best_score = None\n",

" self.early_stop = False\n",

" self.val_loss_min = np.Inf\n",

" self.delta = delta\n",

"\n",

" def __call__(self, val_loss, model, path):\n",

" score = -val_loss\n",

" if self.best_score is None:\n",

" self.best_score = score\n",

" self.save_checkpoint(val_loss, model, path)\n",

"\n",

" # meaning: current score is not 'delta' better than best_score, representing that \n",

" # further training may not bring remarkable improvement in loss. \n",

" elif score < self.best_score + self.delta: \n",

" self.counter += 1\n",

" print(f'EarlyStopping counter: {self.counter} out of {self.patience}')\n",

" # 'No Improvement' times become higher than patience --> Stop Further Training\n",

" if self.counter >= self.patience:\n",

" self.early_stop = True\n",

"\n",

" else: #model's loss is still on decrease, save the now best model and go on training\n",

" self.best_score = score\n",

" self.save_checkpoint(val_loss, model, path)\n",

" self.counter = 0\n",

"\n",

" def save_checkpoint(self, val_loss, model, path):\n",

" ### used for saving the current best model\n",

" if self.verbose:\n",

" print(f'Validation loss decreased ({self.val_loss_min:.6f} --> {val_loss:.6f}). Saving model ...')\n",

" torch.save(model.state_dict(), path + '/' + 'checkpoint.pth')\n",

" self.val_loss_min = val_loss"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 5.3 Optimizer and Criterion\n",

"\n",

"The optimizer and criterion are defined in __class Exp_Long_Term_Forecast__ and called in the training process by function `self._select_optimizer()` and `self._select_criterion()`. Here, for long-term forecasting task, we simply adopt Adam optimizer and MSELoss to meature the loss between real data and predicted ones."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def _select_optimizer(self):\n",

" model_optim = optim.Adam(self.model.parameters(), lr=self.args.learning_rate)\n",

" return model_optim\n",

"\n",

"def _select_criterion(self):\n",

" criterion = nn.MSELoss()\n",

" return criterion"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 5.4 Automatic Mixed Precision(AMP)\n",

"\n",

"AMP is a technique used in deep learning to improve training speed and reduce memory usage. AMP achieves this by mixing calculations in half-precision (16-bit floating-point) and single-precision (32-bit floating-point).\n",

"\n",

"Let's have a closer look on this snippet:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"#in forward process:\n",

"with torch.cuda.amp.autocast():\n",

"\n",

"...\n",

"\n",

"#in BP process:\n",

"if self.args.use_amp:\n",

" scaler.scale(loss).backward()\n",

" scaler.step(model_optim)\n",

" scaler.update()\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"` with torch.cuda.amp.autocast():` : The purpose of using torch.cuda.amp.autocast() is to take advantage of the speed and memory efficiency benefits of mixed-precision training while maintaining numerical stability. Some deep learning models can benefit significantly from this technique, especially on modern GPUs with hardware support for half-precision arithmetic. It allows you to perform certain calculations more quickly while still ensuring that critical calculations (e.g., gradient updates) are performed with sufficient precision to avoid loss of accuracy.\n",

"\n",

"`scaler.scale(loss).backward()`: If AMP is enabled, it uses a scaler object created with torch.cuda.amp.GradScaler() to automatically scale the loss and perform backward propagation. This is a crucial part of AMP, ensuring numerical stability. Before backpropagation, the loss is scaled to an appropriate range to prevent gradients from diverging too quickly or causing numerical instability.\n",

"\n",

"`scaler.step(model_optim)`: Next, the scaler calls the step method, which applies the scaled gradients to the model's optimizer (model_optim). This is used to update the model's weights to minimize the loss function.\n",

"\n",

"`scaler.update()`: Finally, the scaler calls the update method, which updates the scaling factor to ensure correct scaling of the loss for the next iteration. This step helps dynamically adjust the scaling of gradients to adapt to different training scenarios."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### 5.5 Learning Rate Adjustment\n",

"\n",

"While the optimizer are responsible for adapting the learning rate with epochs, we would still like to do some adjustment on it manually, as indicated in the function `adjust_learning_rate(model_optim, epoch + 1, self.args)`, whose codes are shown below(original code is in `tools.py`): "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def adjust_learning_rate(optimizer, epoch, args):\n",

"\n",

" #first type: learning rate decrease with epoch by exponential\n",

" if args.lradj == 'type1':\n",

" lr_adjust = {epoch: args.learning_rate * (0.5 ** ((epoch - 1) // 1))}\n",

"\n",

" #second type: learning rate decrease manually\n",

" elif args.lradj == 'type2':\n",

" lr_adjust = {\n",

" 2: 5e-5, 4: 1e-5, 6: 5e-6, 8: 1e-6,\n",

" 10: 5e-7, 15: 1e-7, 20: 5e-8\n",

" }\n",

"\n",

" #1st type: update in each epoch\n",

" #2nd type: only update in epochs that are written in Dict lr_adjust\n",

" if epoch in lr_adjust.keys():\n",

" lr = lr_adjust[epoch]\n",

" \n",

" # change the learning rate for different parameter groups within the optimizer\n",

" for param_group in optimizer.param_groups:\n",

" param_group['lr'] = lr\n",

" print('Updating learning rate to {}'.format(lr))"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 6. Validation and Testing\n",

"\n",

"During training, the model continuously adjusts its weights and parameters to minimize training error. However, this may not reflect the model's performance on unseen data. Validation allows us to periodically assess the model's performance on data that is different from the training data, providing insights into the model's generalization ability.\n",

"\n",

"By comparing performance on the validation set, we can identify whether the model is overfitting. Overfitting occurs when a model performs well on training data but poorly on unseen data. Monitoring performance on the validation set helps detect overfitting early and take measures to prevent it, such as early stopping or adjusting hyperparameters.\n",

"\n",

"Here, we still take long-term forecasting as an example, similar to train process:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def vali(self, vali_data, vali_loader, criterion):\n",

" total_loss = []\n",

"\n",

" #evaluation mode\n",

" self.model.eval()\n",

" with torch.no_grad():\n",

" for i, (batch_x, batch_y, batch_x_mark, batch_y_mark) in enumerate(vali_loader):\n",

" batch_x = batch_x.float().to(self.device)\n",

" batch_y = batch_y.float()\n",

"\n",

" batch_x_mark = batch_x_mark.float().to(self.device)\n",

" batch_y_mark = batch_y_mark.float().to(self.device)\n",

"\n",

" # decoder input\n",

" dec_inp = torch.zeros_like(batch_y[:, -self.args.pred_len:, :]).float()\n",

" dec_inp = torch.cat([batch_y[:, :self.args.label_len, :], dec_inp], dim=1).float().to(self.device)\n",

" # encoder - decoder\n",

" if self.args.use_amp:\n",

" with torch.cuda.amp.autocast():\n",

" if self.args.output_attention:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]\n",

" else:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)\n",

" else:\n",

" if self.args.output_attention:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]\n",

" else:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)\n",

" f_dim = -1 if self.args.features == 'MS' else 0\n",

" outputs = outputs[:, -self.args.pred_len:, f_dim:]\n",

" batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)\n",

"\n",

" pred = outputs.detach().cpu()\n",

" true = batch_y.detach().cpu()\n",

"\n",

" loss = criterion(pred, true)\n",

"\n",

" total_loss.append(loss)\n",

" total_loss = np.average(total_loss)\n",

" self.model.train()\n",

" return total_loss"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Testing is similar to validation, but it's purpose is to examine how well the model behaves, so it's common to add some visualization with __matplotlib.pyplot__. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import matplotlib.pyplot as plt\n",

"\n",

"def visual(true, preds=None, name='./pic/test.pdf'):\n",

" \"\"\"\n",

" Results visualization\n",

" \"\"\"\n",

" plt.figure()\n",

" plt.plot(true, label='GroundTruth', linewidth=2)\n",

" if preds is not None:\n",

" plt.plot(preds, label='Prediction', linewidth=2)\n",

" plt.legend()\n",

" plt.savefig(name, bbox_inches='tight')"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def test(self, setting, test=0):\n",

" test_data, test_loader = self._get_data(flag='test')\n",

" if test:\n",

" print('loading model')\n",

" self.model.load_state_dict(torch.load(os.path.join('./checkpoints/' + setting, 'checkpoint.pth')))\n",

"\n",

" preds = []\n",

" trues = []\n",

" folder_path = './test_results/' + setting + '/'\n",

" if not os.path.exists(folder_path):\n",

" os.makedirs(folder_path)\n",

"\n",

" self.model.eval()\n",

" with torch.no_grad():\n",

" for i, (batch_x, batch_y, batch_x_mark, batch_y_mark) in enumerate(test_loader):\n",

" batch_x = batch_x.float().to(self.device)\n",

" batch_y = batch_y.float().to(self.device)\n",

"\n",

" batch_x_mark = batch_x_mark.float().to(self.device)\n",

" batch_y_mark = batch_y_mark.float().to(self.device)\n",

"\n",

" # decoder input\n",

" dec_inp = torch.zeros_like(batch_y[:, -self.args.pred_len:, :]).float()\n",

" dec_inp = torch.cat([batch_y[:, :self.args.label_len, :], dec_inp], dim=1).float().to(self.device)\n",

" # encoder - decoder\n",

" if self.args.use_amp:\n",

" with torch.cuda.amp.autocast():\n",

" if self.args.output_attention:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]\n",

" else:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)\n",

" else:\n",

" if self.args.output_attention:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]\n",

"\n",

" else:\n",

" outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)\n",

"\n",

" f_dim = -1 if self.args.features == 'MS' else 0\n",

" outputs = outputs[:, -self.args.pred_len:, f_dim:]\n",

" batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)\n",

" outputs = outputs.detach().cpu().numpy()\n",

" batch_y = batch_y.detach().cpu().numpy()\n",

"\n",

" #inverse the data if scaled\n",

" if test_data.scale and self.args.inverse:\n",

" outputs = test_data.inverse_transform(outputs)\n",

" batch_y = test_data.inverse_transform(batch_y)\n",

"\n",

" pred = outputs\n",

" true = batch_y\n",

"\n",

" preds.append(pred)\n",

" trues.append(true)\n",

"\n",

" #visualize one piece of data every 20\n",

" if i % 20 == 0:\n",

" input = batch_x.detach().cpu().numpy()\n",

" #the whole sequence\n",

" gt = np.concatenate((input[0, :, -1], true[0, :, -1]), axis=0)\n",

" pd = np.concatenate((input[0, :, -1], pred[0, :, -1]), axis=0)\n",

" visual(gt, pd, os.path.join(folder_path, str(i) + '.pdf'))\n",

"\n",

" preds = np.array(preds)\n",

" trues = np.array(trues) # shape[batch_num, batch_size, pred_len, features]\n",

" print('test shape:', preds.shape, trues.shape)\n",

" preds = preds.reshape(-1, preds.shape[-2], preds.shape[-1])\n",

" trues = trues.reshape(-1, trues.shape[-2], trues.shape[-1])\n",

" print('test shape:', preds.shape, trues.shape)\n",

"\n",

" # result save\n",

" folder_path = './results/' + setting + '/'\n",

" if not os.path.exists(folder_path):\n",

" os.makedirs(folder_path)\n",

"\n",

" mae, mse, rmse, mape, mspe = metric(preds, trues)\n",

" print('mse:{}, mae:{}'.format(mse, mae))\n",

" f = open(\"result_long_term_forecast.txt\", 'a')\n",

" f.write(setting + \" \\n\")\n",

" f.write('mse:{}, mae:{}'.format(mse, mae))\n",

" f.write('\\n')\n",

" f.write('\\n')\n",

" f.close()\n",

" \n",

" np.save(folder_path + 'metrics.npy', np.array([mae, mse, rmse, mape, mspe]))\n",

" np.save(folder_path + 'pred.npy', preds)\n",

" np.save(folder_path + 'true.npy', trues)\n",

"\n",

" return\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 7. Dataloader and DataProvider\n",

"\n",

"In the process of training, we simply take the dataloader for granted, by the function `self._get_data(flag='train')`. So how does this line work? Have a look at the definition(in __class Exp_Long_Term_Forecast__):"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def _get_data(self, flag):\n",

" data_set, data_loader = data_provider(self.args, flag)\n",

" return data_set, data_loader"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"One step forward, see `data_provider(self.args, flag)`(in `data_factory.py`):"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# Below are some dataloaders defined in data_loader.py. If you want to add your own data, \n",

"# go and check data_loader.py to rewrite a dataloader to fit your data.\n",

"data_dict = {\n",

" 'ETTh1': Dataset_ETT_hour,\n",

" 'ETTh2': Dataset_ETT_hour,\n",

" 'ETTm1': Dataset_ETT_minute,\n",

" 'ETTm2': Dataset_ETT_minute,\n",

" 'custom': Dataset_Custom,\n",

" 'm4': Dataset_M4,\n",

" 'PSM': PSMSegLoader,\n",

" 'MSL': MSLSegLoader,\n",

" 'SMAP': SMAPSegLoader,\n",

" 'SMD': SMDSegLoader,\n",

" 'SWAT': SWATSegLoader,\n",

" 'UEA': UEAloader\n",

"}\n",

"\n",

"\n",

"def data_provider(args, flag):\n",

" Data = data_dict[args.data] #data_provider\n",

"\n",

" # time features encoding, options:[timeF, fixed, learned]\n",

" timeenc = 0 if args.embed != 'timeF' else 1\n",

"\n",

" #test data provider\n",

" if flag == 'test':\n",

" shuffle_flag = False\n",

" drop_last = True\n",

" if args.task_name == 'anomaly_detection' or args.task_name == 'classification':\n",

" batch_size = args.batch_size\n",

"\n",

" #Some tasks during the testing phase may require evaluating samples one at a time. \n",

" # This could be due to variations in sample sizes in the test data or because the \n",

" # evaluation process demands finer-grained results or different processing. \n",

" else:\n",

" batch_size = 1 # bsz=1 for evaluation\n",

"\n",

" #freq for time features encoding, \n",

" # options:[s:secondly, t:minutely, h:hourly, d:daily, b:business days, w:weekly,\n",

" # m:monthly], you can also use more detailed freq like 15min or 3h')\n",

" freq = args.freq\n",

" else:\n",

" shuffle_flag = True\n",

" drop_last = True\n",

" batch_size = args.batch_size # bsz for train and valid\n",

" freq = args.freq\n",

"\n",

" if args.task_name == 'anomaly_detection':\n",

" drop_last = False\n",

" data_set = Data(\n",

" root_path=args.root_path, #root path of the data file\n",

" win_size=args.seq_len, #input sequence length\n",

" flag=flag,\n",

" )\n",

" print(flag, len(data_set))\n",

" data_loader = DataLoader(\n",

" data_set,\n",

" batch_size=batch_size,\n",

" shuffle=shuffle_flag,\n",

" num_workers=args.num_workers,#data loader num workers\n",

" drop_last=drop_last)\n",

" return data_set, data_loader\n",

"\n",

" elif args.task_name == 'classification':\n",

" drop_last = False\n",

" data_set = Data(\n",

" root_path=args.root_path,\n",

" flag=flag,\n",

" )\n",

"\n",

" data_loader = DataLoader(\n",

" data_set,\n",

" batch_size=batch_size,\n",

" shuffle=shuffle_flag,\n",

" num_workers=args.num_workers,\n",

" drop_last=drop_last,\n",

" collate_fn=lambda x: collate_fn(x, max_len=args.seq_len) \n",

" #define some limits to collate pieces of data into batches\n",

" )\n",

" return data_set, data_loader\n",

" else:\n",

" if args.data == 'm4':\n",

" drop_last = False\n",

" data_set = Data(\n",

" root_path=args.root_path, #eg. ./data/ETT/\n",

" data_path=args.data_path, #eg. ETTh1.csv\n",

" flag=flag,\n",

" size=[args.seq_len, args.label_len, args.pred_len],\n",

" features=args.features, #forecasting task, options:[M, S, MS]; \n",

" # M:multivariate predict multivariate, S:univariate predict univariate,\n",

" # MS:multivariate predict univariate\n",

" \n",

" target=args.target, #target feature in S or MS task\n",

" timeenc=timeenc,\n",

" freq=freq,\n",

" seasonal_patterns=args.seasonal_patterns\n",

" )\n",

" print(flag, len(data_set))\n",

" data_loader = DataLoader(\n",

" data_set,\n",

" batch_size=batch_size,\n",

" shuffle=shuffle_flag,\n",

" num_workers=args.num_workers,\n",

" drop_last=drop_last)\n",

" return data_set, data_loader\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"From above, it's easy to find that data_provider is responsible for collate the dataset into batches according to different tasks and running mode. It passes the parameters to dataloader(`Data`) to instruct it how to manage a data file into pieces of usable data. Then it also generates the final dara_loader by passing the built-up dataset and some other params to the standard class Dataloader. After that, a dataset that fits the need of the model and a enumerable dataloader are generated. \n",

"\n",

"So how to organize the data file into pieces of data that fits the model? Let's see `data_loader.py`! There are many dataloaders in it, and of course you can write your own dataloader, but here we'll only focus on __class Dataset_ETT_hour(Dataset)__ as an example."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"class Dataset_ETT_hour(Dataset):\n",

" def __init__(self, root_path, flag='train', size=None,\n",

" features='S', data_path='ETTh1.csv',\n",

" target='OT', scale=True, timeenc=0, freq='h', seasonal_patterns=None):\n",

" ... \n",

" def __read_data__(self):\n",

" ... \n",

" def __getitem__(self, index):\n",

" ...\n",

" \n",

" def __len__(self):\n",

" ...\n",

" \n",

" def inverse_transform(self, data):\n",

" ..."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"`__init__()` is the constructor used to initialize various parameters and attributes of the dataset. It takes a series of arguments, including the path to the data file, the dataset's flag (e.g., train, validate, test), dataset size, feature type, target variable, whether to scale the data, time encoding, time frequency, and more. These parameters are used to configure how the dataset is loaded and processed."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def __init__(self, root_path, flag='train', size=None,\n",

" features='S', data_path='ETTh1.csv',\n",

" target='OT', scale=True, timeenc=0, freq='h', seasonal_patterns=None):\n",

" # size [seq_len, label_len, pred_len]\n",

" # info\n",

" if size == None:\n",

" self.seq_len = 24 * 4 * 4\n",

" self.label_len = 24 * 4\n",

" self.pred_len = 24 * 4\n",

" else:\n",

" self.seq_len = size[0]\n",

" self.label_len = size[1]\n",

" self.pred_len = size[2]\n",

" # init\n",

" assert flag in ['train', 'test', 'val']\n",

" type_map = {'train': 0, 'val': 1, 'test': 2}\n",

" self.set_type = type_map[flag]\n",

" self.features = features\n",

" self.target = target\n",

" self.scale = scale\n",

" self.timeenc = timeenc\n",

" self.freq = freq\n",

" self.root_path = root_path\n",

" self.data_path = data_path\n",

" \n",

" # After initialization, call __read_data__() to manage the data file.\n",

" self.__read_data__()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The actual process of managing data file into usable data pieces happens in `__read_data__()`, see below:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def __read_data__(self):\n",

" self.scaler = StandardScaler()\n",

"\n",

" #get raw data from path\n",

" df_raw = pd.read_csv(os.path.join(self.root_path,\n",

" self.data_path))\n",

"\n",

" # split data set into train, vali, test. border1 is the left border and border2 is the right.\n",

" # Once flag(train, vali, test) is determined, __read_data__ will return certain part of the dataset.\n",

" border1s = [0, 12 * 30 * 24 - self.seq_len, 12 * 30 * 24 + 4 * 30 * 24 - self.seq_len]\n",

" border2s = [12 * 30 * 24, 12 * 30 * 24 + 4 * 30 * 24, 12 * 30 * 24 + 8 * 30 * 24]\n",

" border1 = border1s[self.set_type]\n",

" border2 = border2s[self.set_type]\n",

"\n",

" #decide which columns to select\n",

" if self.features == 'M' or self.features == 'MS':\n",

" cols_data = df_raw.columns[1:] # column name list (remove 'date')\n",

" df_data = df_raw[cols_data] #remove the first column, which is time stamp info\n",

" elif self.features == 'S':\n",

" df_data = df_raw[[self.target]] # target column\n",

"\n",

" #scale data by the scaler that fits training data\n",

" if self.scale:\n",

" train_data = df_data[border1s[0]:border2s[0]]\n",

" #train_data.values: turn pandas DataFrame into 2D numpy\n",

" self.scaler.fit(train_data.values) \n",

" data = self.scaler.transform(df_data.values)\n",

" else:\n",

" data = df_data.values \n",

" \n",

" #time stamp:df_stamp is a object of and\n",

" # has one column called 'date' like 2016-07-01 00:00:00\n",

" df_stamp = df_raw[['date']][border1:border2]\n",

" \n",

" # Since the date format is uncertain across different data file, we need to \n",

" # standardize it so we call func 'pd.to_datetime'\n",

" df_stamp['date'] = pd.to_datetime(df_stamp.date) \n",

"\n",

" if self.timeenc == 0: #time feature encoding is fixed or learned\n",

" df_stamp['month'] = df_stamp.date.apply(lambda row: row.month, 1)\n",

" df_stamp['day'] = df_stamp.date.apply(lambda row: row.day, 1)\n",

" df_stamp['weekday'] = df_stamp.date.apply(lambda row: row.weekday(), 1)\n",

" df_stamp['hour'] = df_stamp.date.apply(lambda row: row.hour, 1)\n",

" #now df_frame has multiple columns recording the month, day etc. time stamp\n",

" # next we delete the 'date' column and turn 'DataFrame' to a list\n",

" data_stamp = df_stamp.drop(['date'], 1).values\n",

"\n",

" elif self.timeenc == 1: #time feature encoding is timeF\n",

" '''\n",

" when entering this branch, we choose arg.embed as timeF meaning we want to \n",

" encode the temporal info. 'freq' should be the smallest time step, and has \n",

" options:[s:secondly, t:minutely, h:hourly, d:daily, b:business days, w:weekly, m:monthly], you can also use more detailed freq like 15min or 3h')\n",

" So you should check the timestep of your data and set 'freq' arg. \n",

" After the time_features encoding, each date info format will be encoded into \n",

" a list, with each element denoting the relative position of this time point\n",

" (e.g. Day of Week, Day of Month, Hour of Day) and each normalized within scope[-0.5, 0.5]\n",

" '''\n",

" data_stamp = time_features(pd.to_datetime(df_stamp['date'].values), freq=self.freq)\n",

" data_stamp = data_stamp.transpose(1, 0)\n",

" \n",

" \n",

" # data_x and data_y are same copy of a certain part of data\n",

" self.data_x = data[border1:border2]\n",

" self.data_y = data[border1:border2]\n",

" self.data_stamp = data_stamp"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"`__read_data__()` splits the dataset into 3 parts, selects the needed columns and manages time stamp info. It gives out the well-managed data array for later use. Next, we have to finish the overload of __class Dataset__, see `__getitem__(self, index)` and `__len__(self)`:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def __getitem__(self, index):\n",

" #given an index, calculate the positions after this index to truncate the dataset\n",

" s_begin = index\n",

" s_end = s_begin + self.seq_len\n",

" r_begin = s_end - self.label_len\n",

" r_end = r_begin + self.label_len + self.pred_len\n",

"\n",

" #input and output sequence\n",

" seq_x = self.data_x[s_begin:s_end]\n",

" seq_y = self.data_y[r_begin:r_end]\n",

"\n",

" #time mark\n",

" seq_x_mark = self.data_stamp[s_begin:s_end]\n",

" seq_y_mark = self.data_stamp[r_begin:r_end]\n",

"\n",

" return seq_x, seq_y, seq_x_mark, seq_y_mark\n",

"\n",

"def __len__(self):\n",

" return len(self.data_x) - self.seq_len - self.pred_len + 1"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"You can also add an inverse_transform for scaler if needed."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"def inverse_transform(self, data):\n",

" return self.scaler.inverse_transform(data)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

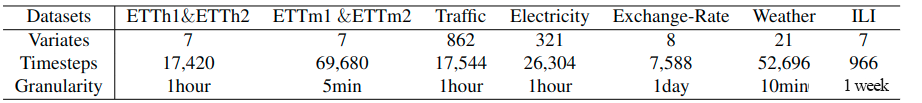

"By now, we have finished constructing the dataset and dataloader. If you want to construct your own data and run it on the net, you can find proper data and try to accomplish the functions listed above. Here are some widely used datasets in times series analysis.\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### 8. Running the Experiment and Visualizing Result\n",

"\n",

"After managing the data, model well, we need to write a shell script for the experiment. In the script, we need to run `run.py` with several arguments, which is part of the configuration. Here, let's see `TimesNet` on task long-term forecast with dataset ETTh1 for example."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"vscode": {

"languageId": "shellscript"

}

},

"outputs": [],

"source": [

"model_name=TimesNet\n",

"\n",

"\n",

"python -u run.py \\\n",

" --task_name long_term_forecast \\\n",

" --is_training 1 \\\n",

" --root_path ./dataset/ETT-small/ \\\n",

" --data_path ETTh1.csv \\\n",

" --model_id ETTh1_96_96 \\\n",

" --model $model_name \\\n",

" --data ETTh1 \\\n",

" --features M \\\n",

" --seq_len 96 \\\n",

" --label_len 48 \\\n",

" --pred_len 96 \\\n",

" --e_layers 2 \\\n",

" --d_layers 1 \\\n",

" --factor 3 \\\n",

" --enc_in 7 \\\n",

" --dec_in 7 \\\n",

" --c_out 7 \\\n",

" --d_model 16 \\\n",

" --d_ff 32 \\\n",

" --des 'Exp' \\\n",

" --itr 1 \\\n",

" --top_k 5\n"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"After finishing the shell script, you can run it in shell using bash. For example, you can run the following command, for `TimesNet` ETTh1 long-term forecast:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"vscode": {

"languageId": "shellscript"

}

},

"outputs": [],

"source": [

"bash ./scripts/long_term_forecast/ETT_script/TimesNet_ETTh1.sh"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Here, the bash command may not be successfully implemented due to a lack of proper packages in the environment. If that's the case, simply follow the error information to install the missing package step by step until you achieve success. The sign of a successful experiment running is that information about the experiment is printed out, such as:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"vscode": {

"languageId": "plaintext"

}

},

"outputs": [],

"source": [

"Namespace(task_name='long_term_forecast', is_training=1, model_id='ETTh1_96_96', model='TimesNet', data='ETTh1', root_path='./dataset/ETT-small/', data_path='ETTh1.csv', features='M', target='OT', freq='h', checkpoints='./checkpoints/', seq_len=96, label_len=48, pred_len=96, seasonal_patterns='Monthly', inverse=False, mask_rate=0.25, anomaly_ratio=0.25, top_k=5, num_kernels=6, enc_in=7, dec_in=7, c_out=7, d_model=16, n_heads=8, e_layers=2, d_layers=1, d_ff=32, moving_avg=25, factor=3, distil=True, dropout=0.1, embed='timeF', activation='gelu', output_attention=False, num_workers=10, itr=1, train_epochs=10, batch_size=32, patience=3, learning_rate=0.0001, des='Exp', loss='MSE', lradj='type1', use_amp=False, use_gpu=False, gpu=0, use_multi_gpu=False, devices='0,1,2,3', p_hidden_dims=[128, 128], p_hidden_layers=2)\n",

"Use GPU: cuda:0\n",

">>>>>>>start training : long_term_forecast_ETTh1_96_96_TimesNet_ETTh1_ftM_sl96_ll48_pl96_dm16_nh8_el2_dl1_df32_fc3_ebtimeF_dtTrue_Exp_0>>>>>>>>>>>>>>>>>>>>>>>>>>\n",

"train 8449\n",

"val 2785\n",

"test 2785"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Then, the model starts training. Once one epoch finishes training, information like below will be printer out:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"vscode": {

"languageId": "plaintext"

}

},

"outputs": [],

"source": [

" iters: 100, epoch: 1 | loss: 0.4701951\n",

" speed: 0.2108s/iter; left time: 535.7317s\n",

" iters: 200, epoch: 1 | loss: 0.4496171\n",

" speed: 0.0615s/iter; left time: 150.0223s\n",

"Epoch: 1 cost time: 30.09317970275879\n",

"Epoch: 1, Steps: 264 | Train Loss: 0.4964185 Vali Loss: 0.8412074 Test Loss: 0.4290483\n",

"Validation loss decreased (inf --> 0.841207). Saving model ...\n",

"Updating learning rate to 0.0001"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"When all epochs are done, the model steps into testing. The following information about testing will be printed out, giving the MAE and MSE of test."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"vscode": {

"languageId": "plaintext"

}

},

"outputs": [],

"source": [

">>>>>>>testing : long_term_forecast_ETTh1_96_96_TimesNet_ETTh1_ftM_sl96_ll48_pl96_dm16_nh8_el2_dl1_df32_fc3_ebtimeF_dtTrue_Exp_0<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<\n",

"test 2785\n",

"test shape: (2785, 1, 96, 7) (2785, 1, 96, 7)\n",

"test shape: (2785, 96, 7) (2785, 96, 7)\n",

"mse:0.3890332877635956, mae:0.41201362013816833"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

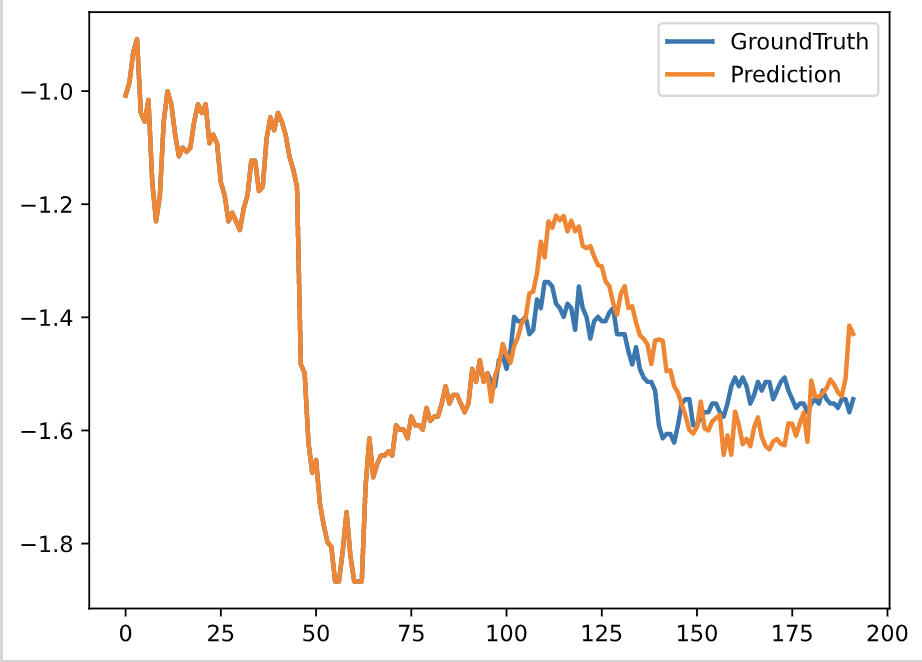

"After test finishes, some visible information are already stored in the test_results folder in PDF format. For example:\n",

"\n",

""

]

}

],

"metadata": {

"language_info": {

"name": "python"

},

"orig_nbformat": 4

},

"nbformat": 4,

"nbformat_minor": 2

}